The Challenge

If you have worked closely with self-hosted/non-cloud databases, you will likely be familiar with the challenge of upgrading, load balancing, and failing over relational databases without having to touch client applications. At Goldman Sachs (GS), we work extensively with relational databases that data processing jobs, batches, and UIs connect to via JDBC (TCP layer). Some of these databases do not provide a load balancing and failover mechanism out of the box that works for our use cases. A common way to deal with load balancing and failovers is to place the database behind a Domain Name System (DNS) layer and have the applications connect via the DNS name. However, DNS only abstracts the host, so this causes problems when the port of the database needs to change. Changing the port or the host requires extensive coordination and testing across multiple client applications, and the effort grows with the breadth of the database usage. Our databases are used extensively, so changing ports and retesting every client is neither efficient nor feasible. Thus, to improve resource utilization, save time during database failovers, and improve developer efficiency, we looked into using HAProxy to solve this challenge.

HAProxy has been used extensively in the industry as a web application layer load balancer and gateway for the past 20 years. It is known to scale well, is widely used, and is well documented. Because it is open source, you can use it, contribute to it, and adapt it for your own purposes. This blog post focuses on using HAProxy as a JDBC/TCP layer database load balancer and fast failover mechanism. We use Sybase IQ as the example here, but the approach can be extended as-is to other databases. Please note that this is just one solution to this specific problem; other solutions are available.

How We Use Sybase IQ

Sybase IQ provides suitable query performance without much database optimization, which makes it capable of serving internal reporting dashboards with sub-second response times as well as ad-hoc queries with minute-long response times over terabytes of data. For this use case, we have two multiplex databases running in live-live mode; each multiplex (cluster) has three nodes, one of which is earmarked for writes. Sybase IQ multiplexes have a hybrid cluster architecture that involves both shared and local storage. Local storage is used for catalog metadata, temporary data, and transaction logs. The shared IQ store and shared temporary store are common to all servers. This means that you can write via one node and the data will be available across all nodes on the cluster.

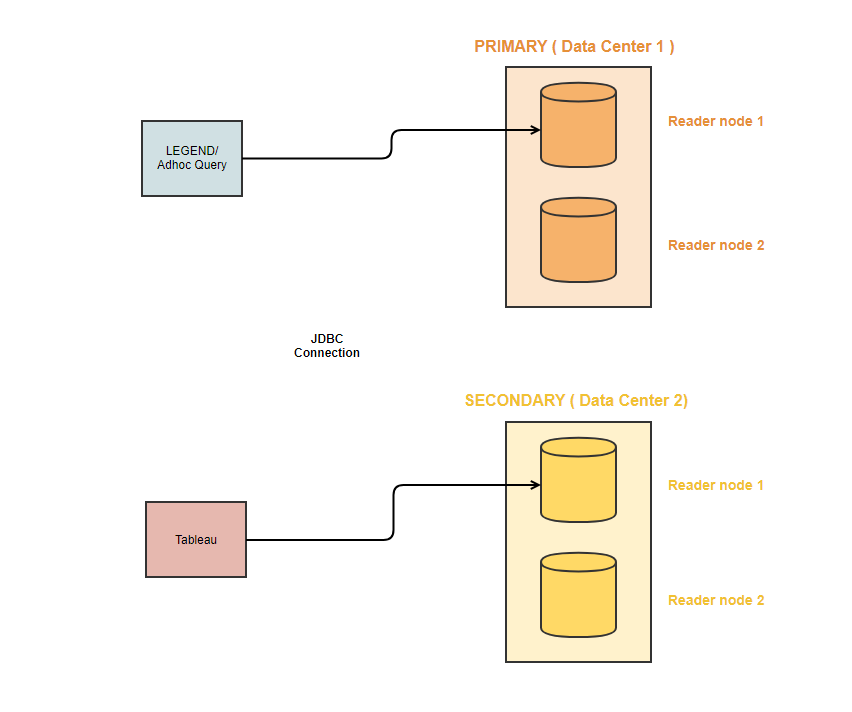

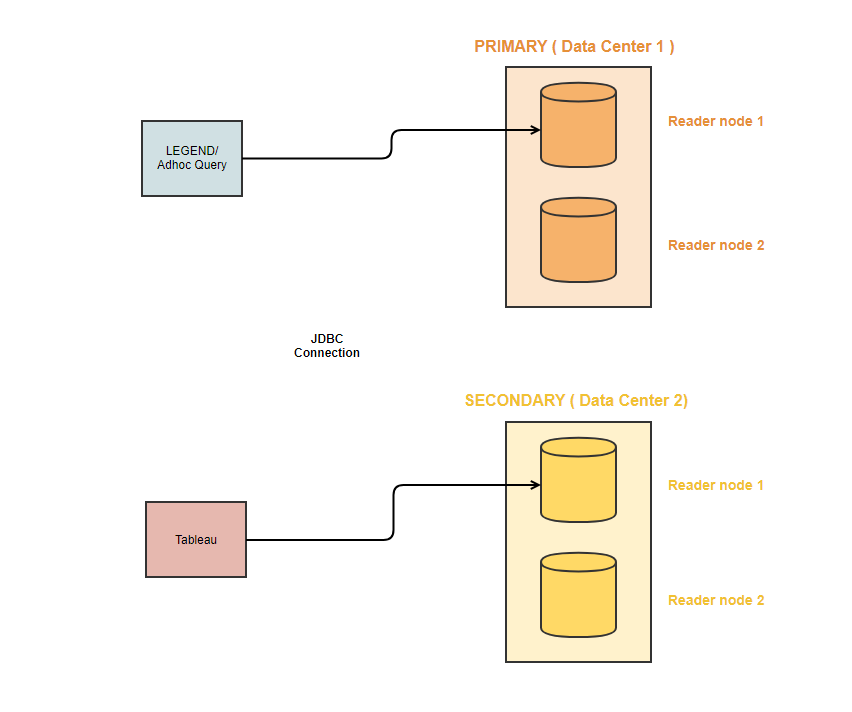

For this deployment, two nodes on each cluster are used for read-only purposes. The writer framework guarantees eventual consistency across the clusters, which are housed in different data centers. Only data that has been written to both clusters can be read via the reader nodes. The data on these reader nodes is read by query and visualization platforms such as Tableau, ad-hoc SQL queries, and Legend (an open source data modeling platform); we refer to these collectively as readers going forward.

Prior Setup and Use Cases

- Tableau dashboards are used for canned reports and drilldowns. The set of queries fired by these dashboards is fixed and is usually served by materialized datasets, i.e., we rarely fire queries with joins from Tableau because they can slow down performance. We promise sub-second SLAs for such queries.

- Ad-hoc queries are fired from the Legend setup, where users can explore their own or custom models. This means that we cannot really optimize such queries and thus promise response times of a few minutes.

- Direct SQL queries are also fired by our developers to debug production issues.

- The JDBC connection string in Tableau was hard-coded to use reader node 1 of the secondary cluster, whereas in Legend it was hard-coded to use reader node 1 of the primary cluster. Developers can use any reader node of their choice.

Figure 1: Tight coupling of applications to specific reader nodes.

Areas for Improvement

- We needed our Sybase IQ cluster to serve queries with 99.9% availability during business hours.

- Because applications were tightly coupled to specific reader nodes (Figure 1), most of the load was directed to one node. Sybase IQ tries to give each query the maximum amount of resources, so any subsequent queries on the same node have to wait until the earlier query completes.

- Sybase IQ does not provide built-in load balancing or failover that suits our use case, leading to saturated hosts and errors if there are too many queries or the queries take too long to resolve.

- If the primary reader nodes go down, the time it takes to point to another node may impact our SLOs.

Sybase IQ allows queries to run across multiple nodes at once, enabling parallelism. In our use case, however, we wanted load segregation and fault tolerance. This requires each node to serve its own queries, which in turn calls for an external load balancer. The need for external load balancers for query/load segregation and faster failovers has been well documented on the SAP blogs.

New Architecture Considerations

- Support mechanisms to route queries between primary and secondary clusters and to switch query routing to another cluster.

- Support mechanisms to shut down unhealthy clusters for reads and writes. Ideally, all writes are rerouted to a healthy cluster.

- Dynamically update the weights of the IQ nodes that sit behind the proxies in a round-robin rotation, based on their health (CPU usage and ability to accept connections).

- This architecture targets Sybase IQ but can easily be used with any other database such as DB2, Sybase ASE, etc. This is because the HAProxy configuration isn't specific to a database and can work with any database at the TCP/JDBC level.

Solution

HAProxy is a free, fast, open source, and reliable solution offering high availability, load balancing, and proxying for TCP and HTTP-based applications. Over the years it has become the de facto standard open source load balancer, is shipped with most mainstream Linux distributions, and is often deployed by default in cloud platforms.

Given the JDBC connections to the database in our use case, we needed a load balancer and gateway solution that works at the TCP (L4) layer and is known to be stable within the domain. After evaluating multiple solutions, we decided to use HAProxy.

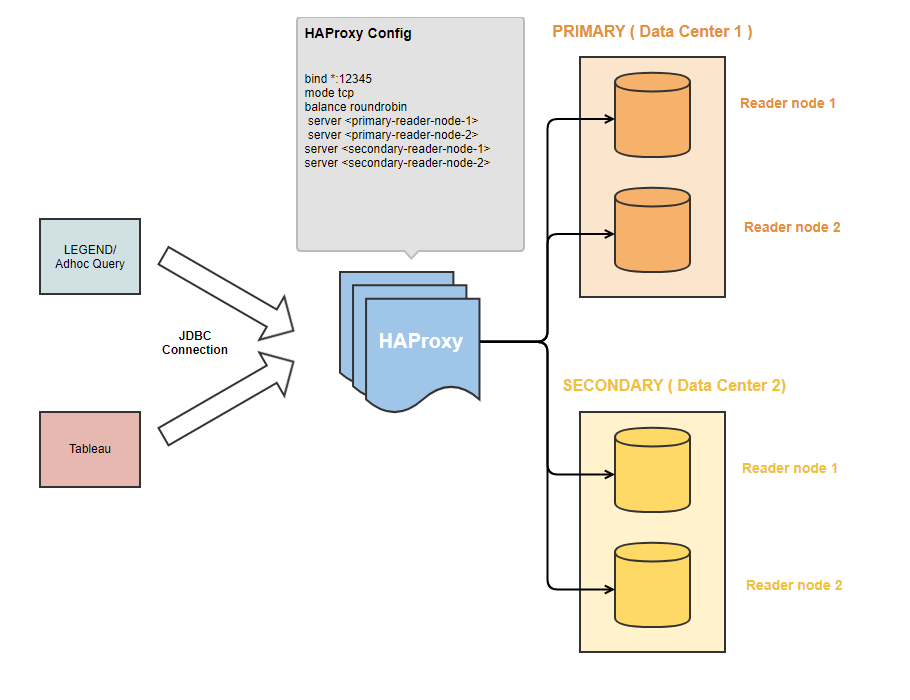

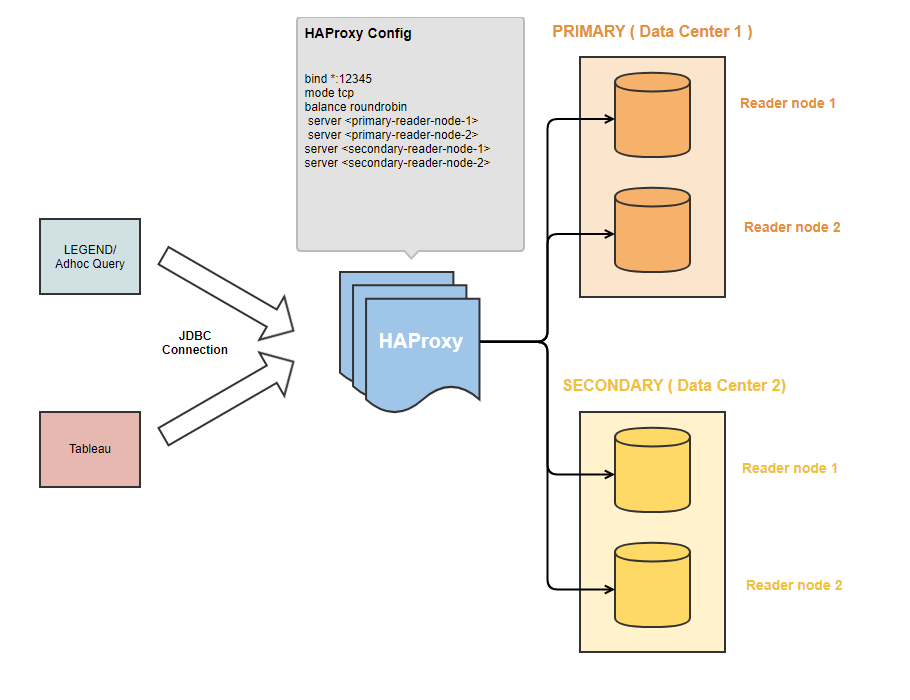

Figure 2: New architecture with HAProxy.

As seen in Figure 2, we installed HAProxy in TCP mode and routed all of our readers through it. The readers behave as if they are connecting directly to the Sybase IQ database using the existing JDBC client driver (jConnect or SQL Anywhere), but in reality HAProxy sits in between and distributes their connections, and the queries they carry, across the available nodes.

Here are additional details on how to set up HAProxy:

- Command for creating an HAProxy instance on Unix: <path_to_HAProxy_installation> -f <path_to_HAProxyConfig.cfg>

Configurations

- HAProxyConfig.cfg – the configuration file, which is deployed on the server using a Terraform-like provisioning tool.

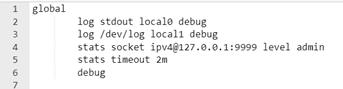

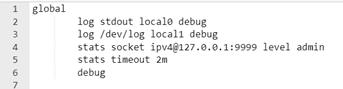

- Global section – used to define the log path, stats socket, debug mode, etc. The stats socket exposes HAProxy's runtime interface; we use it to feed health information about the participating nodes to HAProxy. Based on that health, we can increase or decrease the weight of any node.

Figure 3a: HAProxy config – global section.

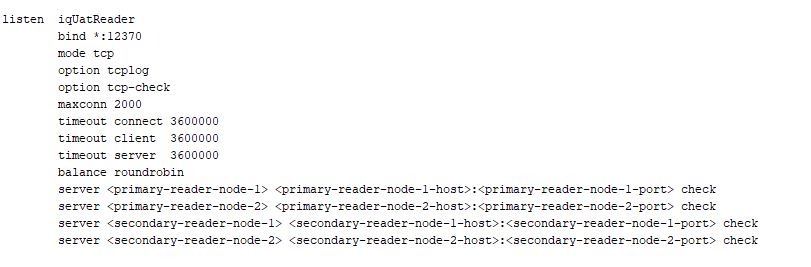

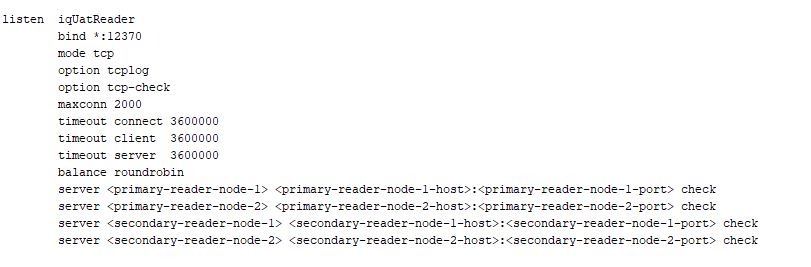

- Listen section – used to open an HAProxy listener socket. We can define multiple listeners for a single HAProxy instance, and each can be either HTTP or TCP. For database load balancing, we use TCP mode. In this section we also define the participating nodes, the socket timeouts in either direction, and the load balancing algorithm.

Figure 3b: HAProxy config – listen section.
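Since the original configuration is only available as screenshots, here is a minimal sketch of what a TCP-mode configuration with a global and a listen section could look like, rendered from a small Python helper. It is not our production file: the listener name, host names, database port, log target, and timeout values are all hypothetical placeholders, and only the stats socket port (9999) comes from this post. The HAProxy directives themselves (stats socket, mode tcp, balance roundrobin, server ... check weight) are standard ones.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReaderNode:
    name: str        # server label inside HAProxy (hypothetical)
    host: str        # reader node host name (hypothetical)
    port: int        # port the database listens on (hypothetical)
    weight: int = 1  # every node starts with the same weight


def render_haproxy_config(listen_name, bind_port, nodes, stats_port=9999):
    """Return the text of a minimal TCP-mode HAProxy configuration."""
    lines = [
        "global",
        "    log 127.0.0.1 local0",
        # Admin-level stats socket so that weights can be changed at runtime.
        f"    stats socket ipv4@127.0.0.1:{stats_port} level admin",
        "    stats timeout 30s",
        "",
        "defaults",
        "    mode tcp",
        "    log global",
        "    timeout connect 5s",
        "",
        f"listen {listen_name}",
        f"    bind *:{bind_port}",
        "    mode tcp",
        "    balance roundrobin",
        # Long timeouts because database sessions can sit idle between queries.
        "    timeout client 30m",
        "    timeout server 30m",
    ]
    for node in nodes:
        # "check" enables a plain TCP connect health check for the node.
        lines.append(
            f"    server {node.name} {node.host}:{node.port} "
            f"check weight {node.weight}"
        )
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    readers = [
        ReaderNode("reader1", "iq-reader-1.example.internal", 2638),
        ReaderNode("reader2", "iq-reader-2.example.internal", 2638),
    ]
    print(render_haproxy_config("iq_readers", 2638, readers))
```

Generating the file from a node list keeps the static part of the configuration in one place, which fits deploying it through a provisioning tool; the weights in the file are only starting values, since they can be changed later through the stats socket.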

Creating Dynamic Nodes

We have set up a cluster of HAProxy instances to make the proxy layer fault tolerant as well. These instances are brought up on UNIX boxes. A simple gateway sits in front of them to ensure high availability in case one of the instances goes down. When we went live with the static configuration, HAProxy had no visibility into the load on the Sybase IQ reader nodes. This meant that our engineers needed to manually change the weights of the nodes to ensure that traffic did not hit the impacted nodes. The points below explain how we made this dynamic.

- We have cron jobs that continuously monitor the state of the nodes and change the weights in HAProxy via the stats socket. The cron jobs look at the CPU and RAM usage of the Sybase IQ reader nodes, along with the number of queries that are already running, to identify the “load factor”. We have internal systems that continuously monitor the health of servers, but the load detection algorithms are generic and can hook up to any underlying metrics.

- Based on the load factor, we hit the stats socket (port 9999) to update the weights of each node.

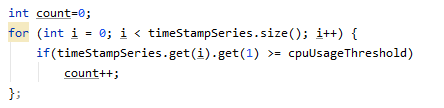

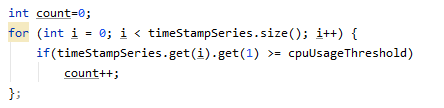

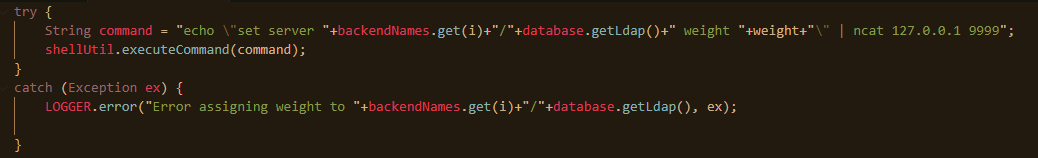

- As an example, Figure 4a shows a snippet of the code: we look for five consecutive CPU readings above 95%, which indicates that the CPU is flatlining and feeds into the load factor of the reader node.

- Note: It is common to notice CPU spikes on Sybase IQ nodes as it tries to allocate maximum resources to every query. The nodes slow down and sometimes hang when the CPU flatlines above 95%.

Figure 4a: CPU flatlining check and lowering the weight.
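The flatlining check described above can be sketched as follows. This is an illustration rather than the code in Figure 4a: the class and function names are hypothetical, and the choice of lowering a flatlining node's weight to 0 is an assumption made for the example (in HAProxy, a server with weight 0 receives no new load-balanced connections). Only the thresholds, five consecutive readings above 95%, come from the text.

```python
from collections import deque


class CpuFlatlineDetector:
    """Flags a node once N consecutive CPU samples exceed a threshold."""

    def __init__(self, threshold_pct=95.0, required_consecutive=5):
        self.threshold_pct = threshold_pct
        self.required = required_consecutive
        # Sliding window holding only the most recent N comparisons.
        self._recent = deque(maxlen=required_consecutive)

    def observe(self, cpu_pct):
        """Record one CPU sample; return True if the node is flatlining."""
        self._recent.append(cpu_pct > self.threshold_pct)
        return len(self._recent) == self.required and all(self._recent)


def choose_weight(flatlining, normal_weight=1, degraded_weight=0):
    """Pick the HAProxy weight for a node (values are assumptions)."""
    return degraded_weight if flatlining else normal_weight


if __name__ == "__main__":
    detector = CpuFlatlineDetector()
    for sample in [96.0, 97.5, 99.0, 98.2, 96.1]:  # made-up CPU readings
        flatlining = detector.observe(sample)
    print("flatlining:", flatlining, "weight:", choose_weight(flatlining))
```

Requiring consecutive readings, rather than reacting to a single sample, avoids demoting a node for the short CPU spikes that are normal on Sybase IQ; a single reading at or below the threshold resets the streak.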

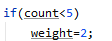

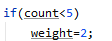

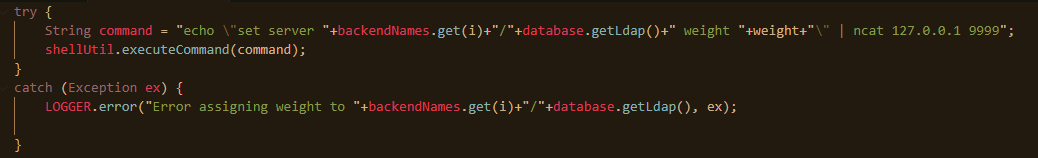

When we bring up HAProxy, all reader nodes are configured with the same weight value of 1, which results in the load balancer distributing queries across the reader nodes equally. Based on the load algorithm, the weight of a busy node is lowered on the fly (in HAProxy, a lower weight means a smaller share of new connections) so that the HAProxy load balancer can minimize the queries hitting the busy reader nodes. Figure 4b shows how we change the weights on HAProxy dynamically via the stats/admin socket.

Figure 4b: Changing the reader node weights via the stats/admin socket.
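As a rough sketch of the weight change over the stats/admin socket, the snippet below sends HAProxy's runtime command "set weight <backend>/<server> <weight>" over TCP. It assumes the stats socket is bound to 127.0.0.1:9999 with admin level; the backend and server names are hypothetical, and the helper functions are illustrative rather than the code shown in Figure 4b.

```python
import socket


def build_set_weight_command(backend, server, weight):
    """Build the runtime command that changes one server's weight."""
    if not 0 <= weight <= 256:
        # HAProxy accepts absolute weights from 0 to 256.
        raise ValueError("HAProxy weights must be between 0 and 256")
    return f"set weight {backend}/{server} {weight}\n"


def send_runtime_command(command, host="127.0.0.1", port=9999, timeout=5.0):
    """Send one command to the stats socket and return HAProxy's reply."""
    with socket.create_connection((host, port), timeout=timeout) as conn:
        conn.sendall(command.encode("ascii"))
        chunks = []
        # In non-interactive mode HAProxy answers one command and then
        # closes the connection, so read until end of stream.
        while True:
            data = conn.recv(4096)
            if not data:
                break
            chunks.append(data)
    return b"".join(chunks).decode("ascii", errors="replace")


if __name__ == "__main__":
    # Hypothetical names: stop sending new connections to a busy reader.
    command = build_set_weight_command("iq_readers", "reader1", 0)
    print(send_runtime_command(command))
```

A weight changed this way takes effect immediately for new connections but is not written back to the configuration file, so the value from the file applies again after a restart.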

Conclusions

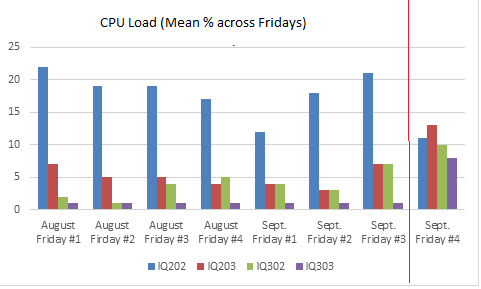

As Figure 5 shows, usage of our cluster was skewed before we introduced HAProxy (before the red line) and has been balanced since go-live (after the red line).

Figure 5: Showing loads pre and post usage of HAProxy.

- The recovery time for a primary node failure has gone down to a few seconds and is within our SLOs. Also, node recovery happens automatically because the weights are updated on HAProxy dynamically.

- We were able to achieve 99.9% cluster availability during business hours with this new architecture. Given that we can utilize multiple nodes at the same time, the downtime of one node doesn’t impact the whole cluster.

- Following the documentation, we performed stress tests on the HAProxy cluster and observed an added latency of 5ms at the 99.9th percentile. In other words, 999 of every 1000 requests/queries incur at most 5ms of additional latency from being forwarded through HAProxy, and only about 1 in 1000 exceeds that, which is negligible.

- As part of this exercise, we also started capturing the read queries and their response times on our cluster. Out of the total set of queries fired on our cluster during the observed time range, 64% of queries ran with sub-second response times and 98% of queries ran with sub-minute response times. Also, no queries were impacted by cluster availability issues.

- Going forward, we plan to take a closer look at the data model to achieve sub-second response times for 99% of our queries.
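To make the 99.9th percentile figure in the latency bullet above concrete, here is a small sketch of a nearest-rank percentile calculation. The sample values are synthetic and chosen purely for illustration; they are not our stress test measurements, and the function name is hypothetical.

```python
import math
from fractions import Fraction


def nearest_rank_percentile(samples, percentile):
    """Smallest sample such that `percentile` % of samples are <= it."""
    if not samples:
        raise ValueError("need at least one sample")
    if not 0 < percentile <= 100:
        raise ValueError("percentile must be in (0, 100]")
    ordered = sorted(samples)
    # Exact rational arithmetic avoids float rounding when computing ranks.
    rank = math.ceil(Fraction(str(percentile)) * len(ordered) / 100)
    return ordered[rank - 1]


if __name__ == "__main__":
    # Synthetic added-latency samples in milliseconds (made up).
    added_latency_ms = [1.0] * 998 + [5.0] + [40.0]
    p999 = nearest_rank_percentile(added_latency_ms, 99.9)
    slower = sum(1 for value in added_latency_ms if value > p999)
    print(f"p99.9 = {p999} ms, samples above it: {slower} of {len(added_latency_ms)}")
```

With 1000 samples, the 99.9th percentile is the 999th smallest value, so at most one sample can lie above it. This is why a tail percentile, rather than an average, is the usual way to state the worst-case overhead of a proxy hop.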

There are several ways to solve load balancing and failover of relational databases. In this blog post, we shared how we are using HAProxy and quantified the improvements. We hope this blog post was informative.

