In a firm of nearly 45,000 employees from across the globe, new ideas are generated daily. But amid the buzz of everyday business, complex transactions, and never-ending activity, what support exists to take these ideas and actually execute on them? That question sparked the creation of Goldman Sachs' internal incubator, GS Accelerate. Founded in 2018, GS Accelerate is a firmwide platform that aims to support the firm’s long-standing culture of innovation and experimentation and to seed commercial, innovative, and growth businesses for Goldman Sachs. Accelerate provides our people with the capital, resources, and support to transform ideas into viable new businesses and products for our clients, with a focus on the firm’s growth. Funded businesses focus on building client solutions that leverage Goldman Sachs’ unique strengths, and they offer employees the opportunity to work in a fast-paced, entrepreneurial environment.

GS Accelerate is where the X25 project began. Funded through Accelerate in 2020, X25 seeks to provide a digital-first, cloud-based solution to transform the financial product landscape. X25's engineers drew on the resources and deep bench of experience of a 150-year-old firm to pioneer cutting-edge digital transformation in the alternative investments industry. In this blog post, we share how the X25 team laid the foundation of our startup by exploring AWS serverless options for our client-facing APIs.

X25 Operating Environment

X25 is a multi-tenant platform for servicing a specific class of complex financial instruments. Most computations are batch operations triggered by each instrument's schedule, which defines one or more events that need to be processed daily. Because of how these events are timed, computations across many instruments often trigger simultaneously, with higher peaks of activity on specific days of the business calendar. The result is highly variable compute demand: long periods of relatively low activity interspersed with brief periods of very high demand. Compute demand can easily vary 1000-fold from hour to hour. We wanted our infrastructure costs to track demand, so exploring AWS serverless options was an obvious decision. Serverless architecture allows customers to scale up and down on demand.
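A little arithmetic makes the cost argument concrete. The sketch below compares two billing models over a hypothetical hourly demand profile, measured in abstract capacity units rather than dollars. In the first, capacity is sized for the peak and paid for around the clock. In the second, pay-per-use capacity tracks demand. The profile is an illustrative assumption chosen to mirror the 1000-fold swing described above, not X25 data: a 1-unit baseline with a single 1000-unit peak hour.

```python
def peak_provisioned_cost(hourly_demand: list[float]) -> float:
    """Capacity-hours paid when capacity is sized for the peak and always on."""
    return max(hourly_demand) * len(hourly_demand)


def pay_per_use_cost(hourly_demand: list[float]) -> float:
    """Capacity-hours paid when billing tracks actual demand (idealized serverless)."""
    return sum(hourly_demand)


if __name__ == "__main__":
    # Hypothetical day: 23 quiet hours at 1 unit, one peak hour at 1000 units.
    demand = [1.0] * 23 + [1000.0]
    always_on = peak_provisioned_cost(demand)  # 1000 * 24 = 24000
    on_demand = pay_per_use_cost(demand)       # 23 + 1000 = 1023
    print(f"peak-provisioned: {always_on:.0f} capacity-hours")
    print(f"pay-per-use:      {on_demand:.0f} capacity-hours")
    print(f"ratio:            {always_on / on_demand:.1f}x")
```

The gap narrows as the baseline rises relative to the peak. Real serverless pricing also has per-request charges and different unit rates, which this model deliberately ignores.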

In addition to these scheduled operations, X25 exposes an API to its tenants. The API is used by the X25 web portal and by any integration services the tenants build. It does not require the same scale as the back-end scheduled events: X25 serves a relatively small number of tenants (fewer than 100), who access the API only intermittently. The advantage of serverless here is not limited to elastic scaling. Serverless also means there are no servers to manage, which reduces operational overhead. Unlike back-end scheduled event handling, latency was an important consideration for the X25 API, as any noticeable latency would impair the user experience.

Cloud Vendor Selection

To best deliver the firm's services, X25's first decision was which cloud vendor(s) to use. Teams that are new to cloud computing may wonder which of two paths to take. They could develop their software in a cloud-neutral fashion, so that they can switch vendors if required. Alternatively, they could select a target vendor and lean into that vendor's services and APIs. The former option is quite challenging because the services and APIs offered by each vendor vary. A cloud-neutral solution can use only baseline infrastructure-as-a-service offerings (which are the most easily switchable). However, that approach means more code for the team to develop and therefore a longer time to market. On the other hand, leaning into a specific vendor's platform and software-as-a-service solutions locks the team into that vendor, which has its own drawbacks.
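Much of the extra code a cloud-neutral approach demands is abstraction layers like the one sketched below. The application codes against an interface, and the team must write, test, and maintain one adapter per vendor. `BlobStore`, `InMemoryBlobStore`, and `save_report` are hypothetical names for illustration. Only an in-memory adapter is shown; real vendor adapters (for example, over Amazon S3 or Google Cloud Storage) would each add their own code and tests.

```python
from typing import Protocol


class BlobStore(Protocol):
    """Vendor-neutral storage interface the application depends on (hypothetical)."""

    def put(self, key: str, data: bytes) -> None: ...

    def get(self, key: str) -> bytes: ...


class InMemoryBlobStore:
    """Reference adapter for local tests; each cloud vendor would need its own adapter."""

    def __init__(self) -> None:
        self._objects: dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self._objects[key] = data

    def get(self, key: str) -> bytes:
        # Every adapter must map its vendor's "not found" error to this one
        # error type, so callers behave identically on any cloud.
        try:
            return self._objects[key]
        except KeyError:
            raise FileNotFoundError(key) from None


def save_report(store: BlobStore, tenant: str, report: bytes) -> str:
    """Application code written only against the neutral interface."""
    key = f"{tenant}/reports/latest"
    store.put(key, report)
    return key
```

Keeping every adapter behaviorally identical (error types, consistency guarantees, pagination) is often where much of the extra effort goes.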

X25 follows a microservice architecture in which services communicate with each other strictly via HTTP URLs. Each service is developed, tested, and deployed independently. In theory, each microservice can therefore select a different cloud vendor and lean into that vendor's offerings. This avoids both the complexity of a vendor-neutral solution and lock-in to a single vendor. Migrating a single microservice is still a re-engineering effort, but it is quite feasible if the microservices are sufficiently granular. A migration from one vendor to another can then proceed service by service, mitigating the risk of a big-bang switchover to another cloud vendor.
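Because services talk to each other only through HTTP URLs, the vendor hosting a service can be hidden behind configuration. The sketch below resolves each dependency's base URL from an environment variable. Migrating one service to another vendor then means changing a single setting, not its callers' code. The service names, the `X25_<NAME>_URL` variable convention, and the example hostnames are all hypothetical.

```python
import os
from collections.abc import Mapping
from typing import Optional
from urllib.parse import urljoin


def service_base_url(service: str, env: Optional[Mapping[str, str]] = None) -> str:
    """Look up a dependency's base URL from configuration, e.g. X25_PRICING_URL for "pricing"."""
    env = os.environ if env is None else env
    var = f"X25_{service.upper().replace('-', '_')}_URL"  # hypothetical naming convention
    if var not in env:
        raise LookupError(f"no URL configured for service {service!r}; set {var}")
    # Ensure a trailing slash so urljoin appends paths instead of replacing the last segment.
    return env[var].rstrip("/") + "/"


def endpoint(service: str, path: str, env: Optional[Mapping[str, str]] = None) -> str:
    """Full URL for a call to another service; callers never see which vendor hosts it."""
    return urljoin(service_base_url(service, env), path.lstrip("/"))
```

For example, pointing `X25_PRICING_URL` at a different host moves every caller of the hypothetical `pricing` service. Setting it to `http://localhost:5000` lets a developer test against a local copy.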

That said, for X25's initial minimum viable product (MVP), the team elected to run all services on AWS’ cloud infrastructure. The primary reason was that Goldman Sachs has already successfully built or migrated multiple businesses onto AWS.

AWS Serverless API

In building the X25 API on AWS, we experimented with AWS's two serverless compute options: Lambda and ECS Fargate.

Lambda is event-driven and provisions compute on demand. Customers pay only for what they use, in terms of memory and computation time. ECS is AWS's managed container orchestration service. ECS can run containers on EC2 instances provisioned by the customer or, with ECS Fargate, on capacity provisioned and managed by AWS. As a container orchestrator, ECS works only with containers. When it first launched, Lambda worked only with ZIP files containing functions that ran in an AWS-provided runtime. More recently, AWS added support for running customer-built container images on Lambda.

Lambda provisions compute on demand and reuses a previously provisioned execution environment for subsequent events. However, after a period of inactivity, AWS reclaims that environment, and the next request requires a cold start, which adds latency. Likewise, during periods of higher demand, AWS spins up additional execution environments, each of which incurs a cold start. Customers can configure provisioned concurrency to keep a defined number of initialized instances always available. However, this baseline of compute incurs a cost even when there is zero activity.
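The reclaim-after-idle behavior can be illustrated with a toy simulation of a single execution environment serving requests one at a time. The idle timeout is a hypothetical parameter, since AWS does not publish a fixed value. Concurrency is ignored. Provisioned concurrency is modeled simply as an environment that is always initialized.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class StartCounts:
    cold: int
    warm: int


def simulate_starts(request_times: list[float], idle_timeout: float,
                    provisioned: bool = False) -> StartCounts:
    """Count cold and warm starts for ONE execution environment serving sequential requests.

    request_times: arrival times in seconds, sorted ascending.
    idle_timeout:  hypothetical idle period after which the environment is reclaimed.
    provisioned:   if True, the environment is kept initialized (provisioned concurrency of 1).
    Concurrent requests are not modeled; each would need its own environment.
    """
    cold = warm = 0
    last_seen: Optional[float] = None
    for t in request_times:
        reclaimed = last_seen is None or t - last_seen > idle_timeout
        if reclaimed and not provisioned:
            cold += 1  # a new environment must be created and initialized
        else:
            warm += 1  # the already-initialized environment is reused
        last_seen = t
    return StartCounts(cold=cold, warm=warm)
```

With requests at 0, 10, 20, 2000, and 2005 seconds and a 600-second idle timeout, the first request and the one after the long gap are cold. Under bursty traffic, each concurrent request beyond the warm (or provisioned) environments would also pay a cold start, which a fuller model would track per environment.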

With ECS Fargate, customers specify a minimum and maximum number of containers (tasks) to run continuously, similar to Lambda's provisioned concurrency, plus an auto-scaling policy. The policy can be driven by different metrics, such as request rate, CPU usage, or memory usage. With suitable thresholds, additional tasks can be provisioned before the running ones saturate, and then scaled back down as demand subsides.
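A target-tracking policy adjusts the task count so that a metric stays near a target value. The sketch below uses a simplified proportional rule, clamped to the configured minimum and maximum. It shows how a target set below saturation (here, 60% average CPU) adds tasks before the running ones are fully loaded. This rule is an approximation for illustration. AWS Application Auto Scaling also applies cooldown periods and other safeguards that are not modeled here.

```python
import math


def desired_task_count(current_tasks: int, metric_value: float, target_value: float,
                       min_tasks: int, max_tasks: int) -> int:
    """Simplified target tracking: scale the task count by metric/target, then clamp.

    Example: 2 tasks at 90% average CPU with a 60% target -> ceil(2 * 90 / 60) = 3 tasks.
    """
    if current_tasks < 1:
        # Nothing is running to measure, so fall back to the configured floor.
        return min_tasks
    proposed = math.ceil(current_tasks * metric_value / target_value)
    return max(min_tasks, min(max_tasks, proposed))
```

In AWS, the corresponding settings are the scalable target's `MinCapacity` and `MaxCapacity`, plus a `TargetTrackingScaling` policy. That policy uses a predefined metric such as `ECSServiceAverageCPUUtilization` or `ALBRequestCountPerTarget`.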

The advantages of Lambda are that it scales on demand without any policy to manage and can scale down to zero when there is no demand. The downside is that latency is unpredictable and may spike on cold starts. ECS Fargate keeps a minimum amount of compute always available, so its latency is lower and more predictable, but it incurs some cost even when there is no demand.

ECS Fargate is tailored for containerized microservices, while Lambda is more geared towards function-as-a-service (FaaS) architectures. However, with AWS adding support for containers and provisioned concurrency to Lambda, the distinction versus ECS Fargate starts to blur. The X25 team initially explored the Lambda .ZIP file approach.

X25 services are built using the Python Flask framework. In deploying to Lambda, the team simply packaged the whole application in a single .ZIP file fronted by an Application Load Balancer. AWS has documented this as a Lambda anti-pattern. Its recommendation is that customers embrace FaaS instead, deploying multiple small Lambda functions (potentially one per API route) fronted by Amazon API Gateway. In effect, API Gateway becomes the REST framework. While this may be the best way to use Lambda, it makes testing more challenging, as an AWS environment is required. With a standalone Flask application, developers can more easily spin up and test the service on their local machines.
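The difference between the two Lambda styles is mostly where routing happens. In the monolith, one function receives every Application Load Balancer event and dispatches on method and path itself. In the FaaS style, API Gateway does that dispatch and each route becomes its own function. The sketch below shows a minimal monolith-style handler. It uses the ALB event fields `httpMethod` and `path`, and returns the response fields `statusCode`, `statusDescription`, `isBase64Encoded`, `headers`, and `body`. The routes and the `ROUTES` table are hypothetical. A real Flask deployment would typically go through a WSGI adapter rather than a hand-written router.

```python
import json
from typing import Any, Callable

Handler = Callable[[dict[str, Any]], tuple[int, Any]]


def get_health(event: dict[str, Any]) -> tuple[int, Any]:
    return 200, {"status": "ok"}


def list_instruments(event: dict[str, Any]) -> tuple[int, Any]:
    # Placeholder data; a real service would query its database here.
    return 200, {"instruments": []}


# In the FaaS style, each entry here would instead be a separate Lambda
# function wired to its own API Gateway route.
ROUTES: dict[tuple[str, str], Handler] = {
    ("GET", "/health"): get_health,
    ("GET", "/instruments"): list_instruments,
}

_STATUS_TEXT = {200: "200 OK", 404: "404 Not Found"}


def lambda_handler(event: dict[str, Any], context: Any) -> dict[str, Any]:
    """Single entry point for every route behind the load balancer."""
    route = ROUTES.get((event.get("httpMethod", ""), event.get("path", "")))
    if route is None:
        status, payload = 404, {"error": "not found"}
    else:
        status, payload = route(event)
    return {
        "statusCode": status,
        "statusDescription": _STATUS_TEXT.get(status, str(status)),
        "isBase64Encoded": False,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(payload),
    }
```

Because the routing lives in ordinary code, it can be exercised locally with a hand-built event dictionary. In the FaaS style, the routing lives in API Gateway configuration instead.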

Building and deploying multiple separate functions also introduces CI/CD challenges. Each function can, in theory, be deployed separately. A careless release process could therefore leave a production environment running a combination of function versions that were never tested together in any pre-production environment. Deploying the functions together as a single container avoids this problem, as the container is a single, atomic deployable unit.
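One way to guard against untested combinations is to record, for each pre-production run, the exact set of function versions that passed together. A production deployment whose combination is not in that set is then refused. The sketch below is a hypothetical check of that kind. The function names, version strings, and manifest format are illustrative assumptions, not X25's pipeline.

```python
from collections.abc import Iterable

Manifest = dict[str, str]  # function name -> deployed version (hypothetical format)


def _as_key(manifest: Manifest) -> frozenset[tuple[str, str]]:
    return frozenset(manifest.items())


def is_tested_combination(candidate: Manifest, tested_runs: Iterable[Manifest]) -> bool:
    """True only if this exact set of function versions passed pre-production together."""
    return _as_key(candidate) in {_as_key(run) for run in tested_runs}


def check_deployable(candidate: Manifest, tested_runs: Iterable[Manifest]) -> None:
    """Block a production release whose combination was never tested as a whole."""
    if not is_tested_combination(candidate, tested_runs):
        raise RuntimeError(f"untested combination of function versions: {sorted(candidate.items())}")
```

Suppose `get_quote` v4 and `book_trade` v7 each passed pre-production, but only in separate runs. That pairing is rejected. A container image sidesteps this bookkeeping because the image itself is the tested combination.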

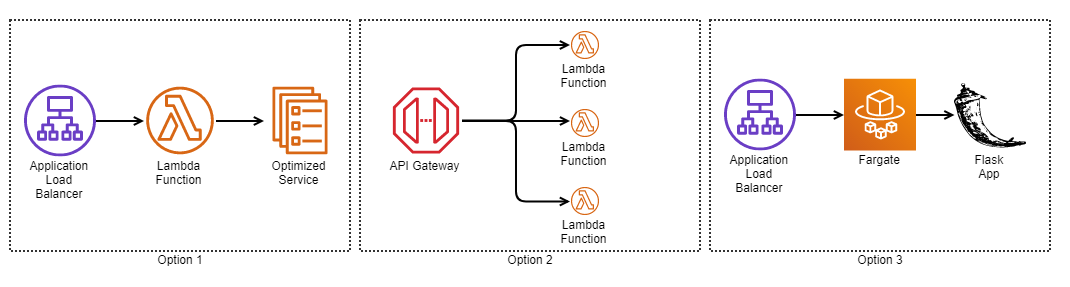

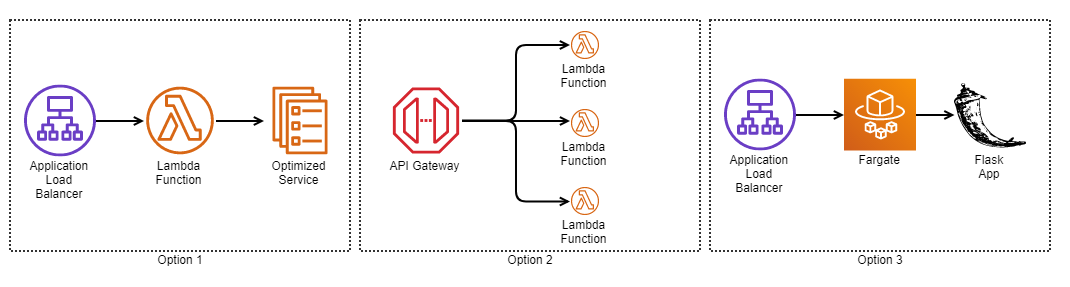

When the Flask-based services were first tested on Lambda, they exhibited a noticeable start-up delay while creating the Flask application and connecting to the database. That delay was unacceptable for the portal user experience, which left the team with three options:

- Option 1: Stick with the Lambda monolith but find a way to optimize the application startup.

- Option 2: Migrate to Lambda FaaS.

- Option 3: Migrate to ECS Fargate with the existing Flask app.

Option 1 offered a few optimizations to explore. Flask could be replaced with a framework that serves its first API call faster (e.g. FastAPI). AWS-managed database connection pooling (such as Amazon RDS Proxy) could also provide faster database connectivity.

Option 2 would arguably be the most natural fit for using Lambda as an API. However, it would require investment in our CI/CD infrastructure for two reasons. Developers would need a simpler way to test locally in a non-AWS environment. The pipeline would also need to ensure that a combination of functions cannot be deployed until it has been proven in the pre-production environment.

The X25 team ultimately chose option 3, as it reduces latency, requires the least additional investment, and is a common, well-understood solution already proven by other teams within GS.

As a consequence of this decision, X25 developers now enjoy a streamlined, low-friction CI/CD process:

- Developers can continue to spin up their services on their local machine and test against localhost.

- Multiple developers can work independently on separate feature branches of the same service. Each feature branch maps to a distinct service container in Fargate, with its own branch-specific URL for client testing.

- Latency spikes when calling the service APIs have been eliminated.
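Mapping feature branches to their own Fargate services needs a deterministic, DNS-safe name per branch. The sketch below derives one. The `dev.example.com` domain and the `<service>-<branch>` naming scheme are hypothetical. The 63-character cap is the maximum length of a single DNS label.

```python
import hashlib
import re

MAX_DNS_LABEL = 63  # a single DNS label may be at most 63 characters


def branch_service_name(service: str, branch: str) -> str:
    """Derive a deterministic, DNS-safe service name from a git branch name."""
    # Lower-case and collapse every run of characters outside [a-z0-9] into one hyphen.
    slug = re.sub(r"[^a-z0-9]+", "-", branch.lower()).strip("-")
    name = f"{service}-{slug}" if slug else service
    if len(name) > MAX_DNS_LABEL:
        # Truncate, but append a short hash of the full branch name so that
        # branches sharing a long prefix still get distinct names.
        digest = hashlib.sha256(branch.encode()).hexdigest()[:8]
        name = f"{name[:MAX_DNS_LABEL - 9].rstrip('-')}-{digest}"
    return name


def branch_service_url(service: str, branch: str, domain: str = "dev.example.com") -> str:
    """Branch-specific URL for client testing (hypothetical domain)."""
    return f"https://{branch_service_name(service, branch)}.{domain}"
```

For example, branch `feature/ABC-123_new-api` of a hypothetical `pricing` service maps to `https://pricing-feature-abc-123-new-api.dev.example.com`.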

Summary

- Either Lambda or ECS Fargate can be used to build serverless applications. When using Lambda, teams must optimize any initialization logic; otherwise, cold-start times can become unacceptable. Provisioned concurrency is not a complete solution, as cold starts still occur when demand exceeds the provisioned capacity.

- It is not clear-cut that the Lambda monolith is an anti-pattern. Both the Lambda monolith and the granular Lambda FaaS approach have pros and cons, and both can suffer from high cold-start latency without sufficient care.

- Building a standalone REST service as a container image has clear benefits. Request routing, request validation, authentication, and authorization can all be handled by the REST framework (Flask and most other frameworks provide all the hooks needed), and developers can easily test locally.

- For operating environments with lower scaling demands, the ECS Fargate container-based solution is the simplest option and offers the lowest friction for developers. It also means developers do not need to worry about optimizing their startup times.
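Flask applications are WSGI applications, so the framework hooks mentioned above can be illustrated without Flask itself. The sketch below uses only the standard library's `wsgiref`. It shows routing plus an authentication check applied before every route, and it exercises the app in-process with no AWS environment. The bearer token, the route, and the `require_token` wrapper are hypothetical. In Flask, the same check would typically live in a `before_request` hook.

```python
import json
from typing import Callable, Iterable
from wsgiref.util import setup_testing_defaults

StartResponse = Callable[..., object]
WSGIApp = Callable[[dict, StartResponse], Iterable[bytes]]

VALID_TOKENS = {"test-token"}  # hypothetical; a real service would validate tokens properly


def _json(start_response: StartResponse, status: str, payload: dict) -> list[bytes]:
    """Serialize a JSON response and send the WSGI status line and headers."""
    body = json.dumps(payload).encode()
    start_response(status, [("Content-Type", "application/json"),
                            ("Content-Length", str(len(body)))])
    return [body]


def app(environ: dict, start_response: StartResponse) -> Iterable[bytes]:
    """Request routing: dispatch on HTTP method and path."""
    if (environ["REQUEST_METHOD"], environ["PATH_INFO"]) == ("GET", "/instruments"):
        return _json(start_response, "200 OK", {"instruments": []})
    return _json(start_response, "404 Not Found", {"error": "not found"})


def require_token(inner: WSGIApp) -> WSGIApp:
    """Authentication applied before every route, like Flask's before_request hook."""
    def wrapped(environ: dict, start_response: StartResponse) -> Iterable[bytes]:
        scheme, _, token = environ.get("HTTP_AUTHORIZATION", "").partition(" ")
        if scheme != "Bearer" or token not in VALID_TOKENS:
            return _json(start_response, "401 Unauthorized", {"error": "unauthorized"})
        return inner(environ, start_response)
    return wrapped


service = require_token(app)


def call(path: str, token: str = "") -> tuple[str, dict]:
    """Invoke the service in-process, as a local unit test would; no AWS needed."""
    environ = {"REQUEST_METHOD": "GET", "PATH_INFO": path}
    if token:
        environ["HTTP_AUTHORIZATION"] = f"Bearer {token}"
    setup_testing_defaults(environ)  # fills in the remaining required WSGI keys
    captured = {}

    def start_response(status, headers, exc_info=None):
        captured["status"] = status

    body = b"".join(service(environ, start_response))
    return captured["status"], json.loads(body)
```

To test against localhost instead, the same `service` object can be served with `wsgiref.simple_server.make_server("localhost", 8000, service).serve_forever()`.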

What's Next for the X25 Team?

Ultimately, the X25 team aims to deploy its APIs across multiple regions and provide an always-available solution, even if an entire AWS region fails. These globally deployed services need to be able to handle any event in any region at any time. This introduces a new challenge when interacting with a database: the team must ensure that multiple concurrent events cannot leave the databases in an inconsistent state. In a future blog post, the team will explore how an always-available, globally consistent database architecture meets this challenge.

It's been a truly energizing journey building X25 through GS Accelerate. Assessing the serverless options for our APIs is just one of the many critical decisions we have made to move our cloud-native business forward.

About GS Accelerate

Since GS Accelerate launched in 2018, Goldman Sachs employees have submitted more than 1,800 ideas. Many of them, including X25, have been funded, resulting in seven new products available to Goldman Sachs clients and people. GS Accelerate businesses are hiring across teams and functions in offices around the globe, including New York, Dallas, and Birmingham.

See https://www.gs.com/disclaimer/global_email for important risk disclosures, conflicts of interest, and other terms and conditions relating to this blog and your reliance on information contained in it.

